Since there is no distribution of nano for DSM, we will have to build it ourselves. I searched around for posts on the subject and, lucky for me, found this one: http://pcloadletter.co.uk/2014/09/17/nano-for-synology/ Awesome! So, it can't be too bad, right? Well, maybe. I had to take some time to dissect the separate steps in that script and figure out what was going on. There were a couple of extra 'hacky' steps, and I wanted to see whether and why they were needed.

Here's what I found and what I did, based on that original script.

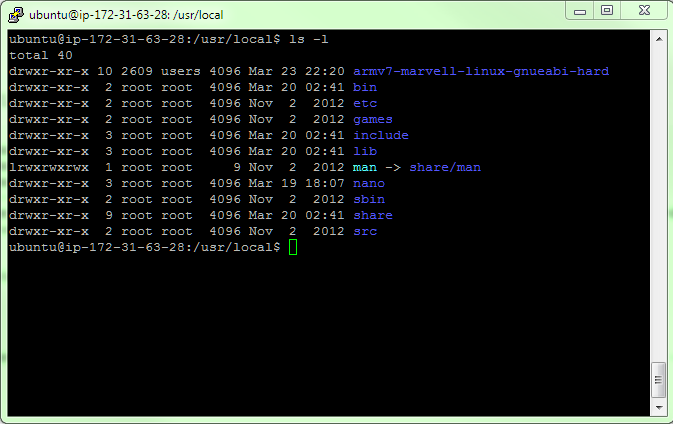

First, there's the typical setup of the environment variables for cross-compiling:

(The extra floating-point CFLAGS are probably not necessary here, since I doubt the nano code does any floating point math...)

export TOOLCHAIN=/usr/local/armv7-marvell-linux-gnueabi-hard

export CC=${TOOLCHAIN}/bin/arm-marvell-linux-gnueabi-gcc

export CXX=${TOOLCHAIN}/bin/arm-marvell-linux-gnueabi-g++

export LD=${TOOLCHAIN}/bin/arm-marvell-linux-gnueabi-ld

export AR=${TOOLCHAIN}/bin/arm-marvell-linux-gnueabi-ar

export RANLIB=${TOOLCHAIN}/bin/arm-marvell-linux-gnueabi-ranlib

export CFLAGS="-I${TOOLCHAIN}/arm-marvell-linux-gnueabi/libc/include -mhard-float -mfpu=vfpv3-d16"

export LDFLAGS="-L${TOOLCHAIN}/arm-marvell-linux-gnueabi/libc/lib"
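Before going further, it can save some head-scratching to confirm that those variables really point at executables. Here is a minimal sketch of such a check; `check_tools` is a hypothetical helper name I made up for illustration, not part of the original script or the toolchain.

```shell
#!/bin/sh
# Hypothetical helper (not from the original script): for each variable
# name given, report whether its value is the path of an executable file.
check_tools() {
    status=0
    for name in "$@"; do
        # Look up the value of the variable whose name is in $name.
        eval "path=\${$name:-}"
        if [ -n "$path" ] && [ -f "$path" ] && [ -x "$path" ]; then
            echo "ok       $name=$path"
        else
            echo "missing  $name=$path"
            status=1
        fi
    done
    return $status
}

# Check the tool variables exported above.
check_tools CC CXX LD AR RANLIB || echo "Fix TOOLCHAIN before running configure."
```

If any line says "missing", the TOOLCHAIN path is the first thing I would look at.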

Next, nano depends on a library called ncurses, which is already installed on the DiskStation. We still need to build it here so that the nano code can link against it. Our friend at pcloadletter pulled that library from the DSM source that is available online and posted just the part we need on Dropbox (thanks!!), so we can download it and configure it for compiling. Here's what those steps look like:

wget https://dl.dropboxusercontent.com/u/1188556/ncurses-5.x.zip

unzip ncurses-5.x.zip

cd ncurses-5.x

./configure --prefix=/home/ubuntu/ncurses --host=armle-unknown-linux --target=armle-unknown-linux --build=i686-pc-linux --with-shared --without-manpages --without-normal --without-progs --without-debug --enable-widec

So far, it looks almost the same as the original script. I did, however, set the prefix parameter to point to /home/ubuntu/ncurses, which will be the target location for the 'make install' command. That directory doesn't exist, so we need to make it.

cd ..

mkdir ncurses

cd ncurses-5.x

make

make install

If that builds correctly, your /home/ubuntu/ncurses and /home/ubuntu/ncurses/lib directories should look like this:

Now we're (almost) ready to build nano. Let's download the nano source code, unpack it, and create the target directory for the final executable.

cd ~

wget http://www.nano-editor.org/dist/v2.2/nano-2.2.6.tar.gz

tar xvzf nano-2.2.6.tar.gz

mkdir nano

Here's the part where I started to get a little confused with the original script. Why do we need to patch the nano source code, and then why do we need to effectively patch the generated Makefile (using sed, which I had to look up, btw, since I had no idea what that was...)? After much trial and error and poking around, it looks like there is a mismatch between the relative paths in the nano source and the paths that the configure script is looking for.

The configure script looks for ncursesw/ncurses.h somewhere on the search path, while parts of the source code refer to ncurses.h without a relative path. So, rather than changing the source code, let's just put both directories on the include search path. We can append the include paths to CFLAGS, append the library path to LDFLAGS so that it points to the ncurses library we just built, and also set the C preprocessor flags (CPPFLAGS) like this:

export CFLAGS="${CFLAGS} -I/home/ubuntu/ncurses/include -I/home/ubuntu/ncurses/include/ncursesw"

export LDFLAGS="${LDFLAGS} -L/home/ubuntu/ncurses/lib"

export CPPFLAGS="-I/home/ubuntu/ncurses/include -I/home/ubuntu/ncurses/include/ncursesw"
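To see why both directories are needed, here is a rough sketch that mimics how the compiler walks its -I list in order. The `resolve_header` function is a hypothetical helper of my own, and it is a simplification: a real compiler also searches its system include directories.

```shell
#!/bin/sh
# Hypothetical helper: print the first place a header name resolves,
# trying each include directory in the order given (like -I flags).
resolve_header() {
    header="$1"
    shift
    for dir in "$@"; do
        if [ -f "$dir/$header" ]; then
            echo "$dir/$header"
            return 0
        fi
    done
    echo "not found: $header" >&2
    return 1
}

# Same prefix as the ncurses build above.
PREFIX=/home/ubuntu/ncurses

# configure asks for ncursesw/ncurses.h; parts of the source ask for ncurses.h.
for h in ncursesw/ncurses.h ncurses.h; do
    resolve_header "$h" "$PREFIX/include" "$PREFIX/include/ncursesw" || true
done
```

With only the first directory on the path, the plain ncurses.h lookup fails; with only the second, the ncursesw/ncurses.h lookup fails. That's the mismatch in a nutshell.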

Now we're ready to (finally) build nano:

cd nano-2.2.6

./configure --prefix=/home/ubuntu/nano --host=armle-unknown-linux --target=armle-unknown-linux --build=i686-pc-linux --enable-utf8 --disable-nls --enable-color --enable-extra --enable-multibuffer --enable-nanorc

make

make install

If we haven't missed anything, then nano should build correctly and your /home/ubuntu/nano/bin directory should look like this:
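To confirm that whatever landed in that directory is actually an ARM binary and not something built for the host, a small check on the ELF header can help. This is only a sketch: `is_arm_elf` is a hypothetical helper I made up, it assumes a little-endian ELF file, and it relies on the ELF machine code for ARM being 40 (0x28).

```shell
#!/bin/sh
# Hypothetical helper: succeed if the file is a little-endian ELF
# whose e_machine field says ARM (40, i.e. bytes 0x28 0x00).
is_arm_elf() {
    f="$1"
    [ -f "$f" ] || return 1
    # Bytes 0-3 hold the ELF magic: 0x7f 'E' 'L' 'F'.
    magic=$(od -An -tx1 -N4 "$f" | tr -d ' \n')
    [ "$magic" = "7f454c46" ] || return 1
    # Bytes 18-19 hold e_machine.
    machine=$(od -An -tx1 -j18 -N2 "$f" | tr -d ' \n')
    [ "$machine" = "2800" ]
}

# Check everything in the install directory used above.
for f in /home/ubuntu/nano/bin/*; do
    if is_arm_elf "$f"; then
        echo "ARM ELF:     $f"
    else
        echo "not ARM ELF: $f"
    fi
done
```

If the `file` utility is installed on the build machine, running it on the binary should give the same answer with more detail.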

Anyone know what the secret is to creating the final executable without the architecture name being tacked on? Also, now that I've built this, I really have no idea how to package this up and get it to the DiskStation so that all the folders are intact, etc. That's what I'll try to dig into next.